Over the last few weeks, AcesoUnderGlass has been posting a series about how to research things effectively. This culminated with her Knowledge Bootstrapping Steps v0.1 guide to turning questions into answers. To a first approximation, I think this skill is the thing that lets people succeed in life. If you know how to answer your own questions, you can often figure out how to do any of the other things you need to do.

Given how important this is, it seemed totally worth experimenting with her method to see if it would work for me. I picked a small topic that I’d been meaning to learn about in detail for years. I used the Knowledge Bootstrapping method to learn about it, and paid a lot of attention to my experience of the process. You can see the output of my research project here. Below is an overly long exploration of my experience researching and writing that blog post.

How I used to learn vs Knowledge Bootstrapping

My learning method has changed wildly over the years. When I was in undergrad, I thought that going to lectures was how you learned things and I barely ever studied anything aside from my own notes. This worked fine for undergrad, but didn’t really prepare me to do any on-the-job learning or to gain new skills. I spent an embarrassingly long time after undergrad just throwing myself really hard at problems until I cracked them open. If I was presented with a project at work that I didn’t immediately know how to do (and that a brief googling didn’t turn up solutions for), then I would just try everything I could think of until I figured something out. That usually worked eventually, but took a long time and involved a lot of dumb mistakes. And when it didn’t work I was left stuck and feeling like I was a failure as a person.

When I went back to college to get a Master’s degree, I knew I couldn’t keep doing that. I had visions of getting a PhD, so I thought I’d be doing original research for the next few years. I had to get good at learning stuff outside of lectures. My approach to this was to say: what did my undergrad professors always tell me to do? Read the textbooks.

So in grad school I got good at reading textbooks, and I always read them cover-to-cover. I didn’t really get good at reading papers, or talking to people about their research or approaches. Just reading textbooks. This was great for the first two years of grad school, which were mostly just taking more interesting classes. I did a few research projects and helped out in my lab quite a bit, but I don’t think I managed to contribute anything very novel via my own research. I ended up leaving after my Master’s for a variety of reasons, but I now wonder if going through with the PhD would have forced me to learn a new research method.

Since grad school, my approach to learning new things, answering questions, and doing research has been a mix of all three methods I’ve used. I’ll read whatever textbooks seem applicable from cover to cover, I’ll throw myself at problems over and over until I manage to beat them into submission, and I’ll watch a lot of lectures on YouTube. All of these methods have one thing in common: they take a lot of time. Now that I have a family, I just don’t have the time that I need to keep making the progress I want.

This is why I was so interested in AcesoUnderGlass’s research method. If it worked, it would make it so much easier to do the things I was already trying to do.

Knowledge Bootstrapping Method

My (current) understanding of her method is that you:

- figure out what big question you want to answer

- break that big question down into smaller questions, each of which feeds into the answer to the big question

- repeat the previous step until you get to concrete questions that you could feasibly answer through simple research

- read books that would answer your concrete questions

- synthesize what you learned from all the books into answers to each of your questions, working back up to your original big question
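As a rough illustration of the steps above, here is a minimal Python sketch of a question tree. This is my own framing, not something from Elizabeth's guide: the `Question` class, its method names, and the example sub-questions are all hypothetical, made up just to show the shape of the decomposition.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Question:
    """One node in a question tree (hypothetical structure, not from the guide)."""
    text: str
    answer: Optional[str] = None
    subquestions: List["Question"] = field(default_factory=list)

    def add(self, text: str) -> "Question":
        """Break this question down by attaching a smaller sub-question."""
        child = Question(text)
        self.subquestions.append(child)
        return child

    def open_leaves(self) -> List["Question"]:
        """Return the concrete (leaf) questions that still have no answer."""
        if not self.subquestions:
            return [] if self.answer else [self]
        leaves: List["Question"] = []
        for sub in self.subquestions:
            leaves.extend(sub.open_leaves())
        return leaves

    def ready_to_synthesize(self) -> bool:
        """True once every direct sub-question has an answer to build on."""
        return bool(self.subquestions) and all(s.answer for s in self.subquestions)


# Illustrative sub-questions only; these are assumptions, not my real list.
root = Question("How do people protect electronics from radiation in space?")
env = root.add("What radiation is present in orbit?")
ics = root.add("How does radiation interact with ICs?")
strike = ics.add("What failures can a single particle strike cause?")

# The reading list is driven by whatever leaves are still open.
todo = [q.text for q in root.open_leaves()]
print(todo)
```

The point of the structure is that reading is always driven by `open_leaves()`, and synthesis only happens at a node once the questions beneath it have answers.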

This is a very question-centered approach, which contrasts significantly with my past approaches. It seems obvious that breaking a problem down like this would be helpful, and honestly I do a lot of problem-reductionism during my beat-it-into-submission attempts. Is this all there is to her super-effective research method?

Elizabeth spends a lot of time on the right way to take notes, going so far as to show templates for how she does it. When I first saw this, I thought it would be useful but not critical. As I’ll discuss below, I now think some of the templating does a lot of heavy lifting in her method.

Furthermore, she based her system around Roam. I’ve been hearing an enormous amount about Roam over the past year or so. People in the rationality community seem enamoured of it. At this point it mostly seems like a web-based zettelkasten to me, and I already use Zettlr. Zettlr is also free, and stores data locally (my preference). It’s intended to be zettelkasten software, but I mostly just use it to journal in and I’m not very familiar with many of its zettelkasten features. I decided to use Zettlr for my project, since it’s already where I write most of my non-work writings and it seems comparable to Roam.

Elizabeth shared several sample pages from her own Roam research. When I browsed Elizabeth’s Roam it seemed super slow, to the point of being unusable. I assume there’s something about loading pages on somebody else’s account that makes it slower.

Ironically, Zettlr crashed on me right after I posted my research results to my blog. I ended up having to uninstall it completely and install a new version in order to get it working again.

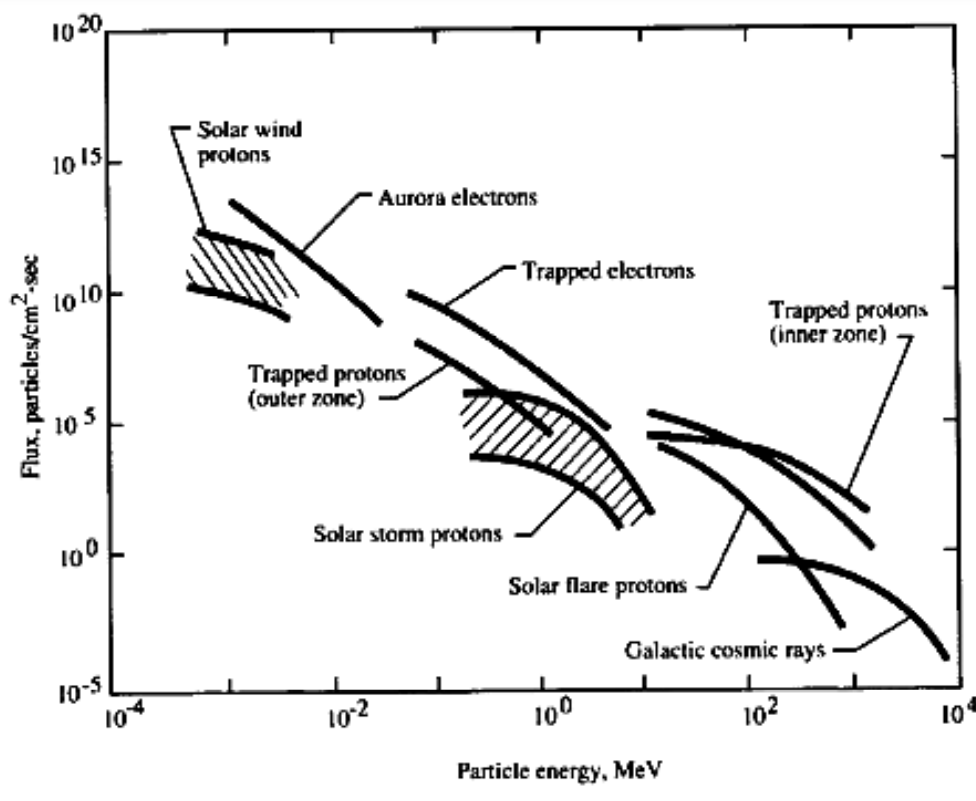

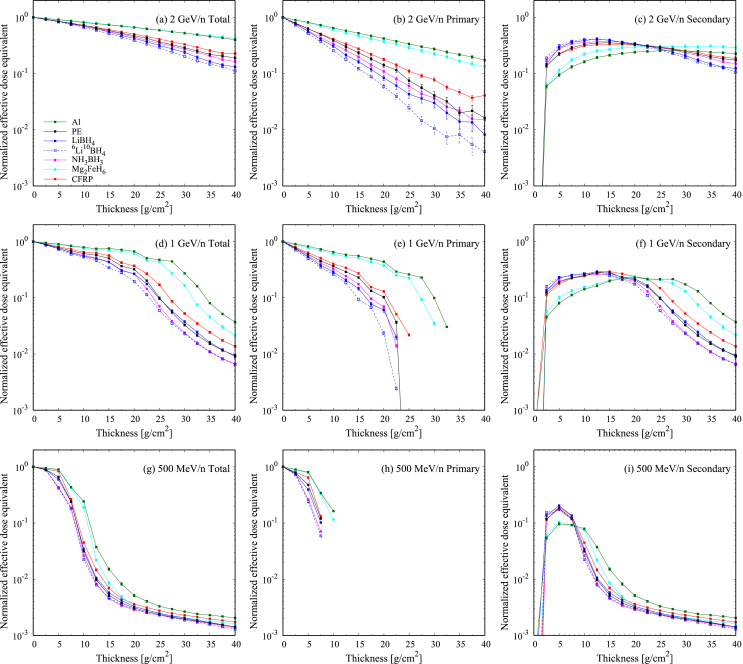

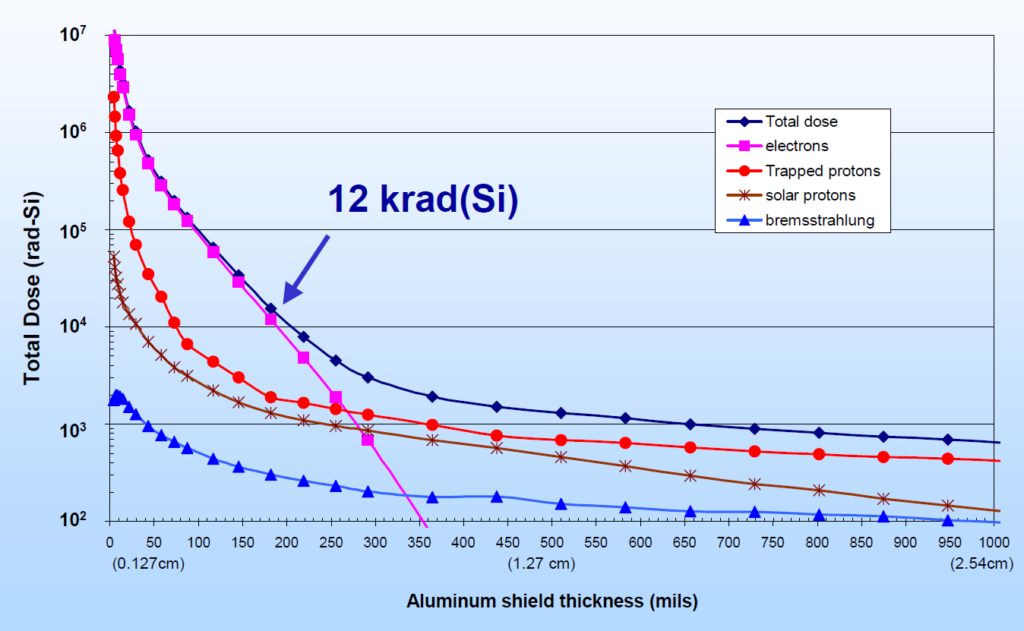

Questions

For my test project, I wanted to do a small investigation to see what Knowledge Bootstrapping was like. Elizabeth gives a couple of her own examples that involve answering pretty large and contentious questions. I picked something small just to get a sense for the method, and decided to learn how people protect electronics from radiation in space. I’m interested in this topic just as a curiosity, but it’s also useful to have a good understanding of it for my job (even though I’m mostly doing software in space these days).

I want to talk a bit about why that choice ended up being better than any alternative that past-me could have chosen.

The rationality and effective-altruism communities have infected me with save-the-world memes. People deeper in those communities than me seem to express those memes in different ways, but there’s definitely a common sense of needing to work on the biggest and most important problems.

This particular meme has been a net negative for me so far. Over the past few years, I sometimes asked myself what I should do with my life, or what my next learning project should be, or what my five-year plan should be. I approached those questions from a first-principles mindset. I would basically say to myself “what is utopia? How do I get there? And how do I do that?” and try to backwards chain from this very vague thing that I didn’t really understand.

This never worked, and I’d always get stuck trying to sketch out how the space economy works in 2200 instead of chaining back to a question that’s useful to answer now. Because I was approaching this project with the mindset of “let’s experiment with something small to see how Elizabeth’s advice works”, I just took a question I was curious about and that would be useful to answer for work. That was amazingly helpful, and I now think that when choosing top level questions I should go with what I’m curious about and what feels useful, and just avoid trying to come up with any sort of “best” question.

The crucial thing here seems to be learning something that feels interesting and useful, instead of learning something that you feel like you should learn. I still think doing highly impactful things is important, but I’m left a bit confused about how to do that. The thing I’ve been doing to try to figure out what’s most important has been sapping my energy and making me feel less interested in doing stuff, which is obviously counterproductive.

Question Decompositions

Decomposing my main question into sub-questions was a straightforward process. It took about five minutes to do, and those sub-questions ended up guiding a lot of my research and writing.

One failure mode that I have with reading books and papers is that it can be hard to mentally engage with them. This is one of the reasons that I have tended to read textbooks cover-to-cover. It makes it easier to engage with each section because I know how it relates to the overall content of the book. When I’ve tried reading only a chapter or section of a book, new notation and terminology has often frustrated me to the point of giving up or just deciding to read the whole thing.

Having the viewpoint of each of my sub-questions let me side-step that issue. For each paper, I could just quickly skim it to find the points that were relevant to my actual questions. Unknown notation and terminology became much easier to handle, because I knew I didn’t have to handle all of it. If something didn’t bear directly on one of my sub-questions (say because it dealt more with solar cycles than with IC interactions), I could safely skip it. If it was important, I knew I only had to read enough to understand the important parts, and that bounded task helped me to keep my motivation up.

When I finished reading a paper, it was always clear what my next step was. I would just go back to my list of questions and see which ones were still not answered. Sometimes the answer to one would create a few new questions, which I would just add to my list.

This also explains why breaking the question down into parts at the beginning is more useful than the decomposition I do when I’m debugging something. By starting with a complete structure of what I think I don’t know, I have context to think about everything I read. That lets me pick up useful information quicker, because it’s more obvious that it’s useful. I’ve had numerous debugging experiences realizing that a blog post I read a week ago actually did contain an unnoticed solution to my problem. By starting with a question scaffold, I think I could speed that process up.

Sources

Elizabeth emphasizes the use of books to answer the questions you come up with. She spends at least one blog post just covering how to find good books. I suspect that this is a bigger problem for topics that are more contentious. The question that I was trying to answer is mostly about physics, and I didn’t have to worry too much about people trying to give me wrong information or sell me something.

I also didn’t particularly want to read 12 books to answer my question, so I decided at the beginning that I’d focus on papers instead. Those tend to be faster to read, and I thought they’d also be more useful (though if I could find the exact right book, it might have answered all my questions in one fell swoop).

I did have some trouble finding solid papers. Standard google searches often turned up press releases or educational materials that NASA made for 6th graders. Those didn’t have the level of detail I needed to really answer my low level questions.

So my method for finding sources was mainly to do scattershot google searches, and then google scholar searches. My search terms were refined the more I read, and I tweaked them depending on which specific sub-question I was trying to answer. When I found a good paper, I would sometimes look at the papers it cited (but honestly I did this less than might have been useful).

In general I think I learned the least from this aspect of the project. Part of this might be that my question just didn’t require as much information seeking expertise as some of the questions that Elizabeth was working on. Part of me wants to do another, slightly larger, Knowledge Bootstrapping experiment where I address a question that is less clear cut or more political.

One thing I did notice while I was doing the research was that a part of me sometimes didn’t want to look things up. It wanted to answer the questions I posed via first principles, and the idea of just looking up a table of data seemed like cheating. This reluctance may come from a self-image I have of someone who can figure things out. Looking things up may challenge that self-image, leading me to think less of myself. I think this is a pretty damaging strategy, though it may explain a bit of my old beat-it-into-submission method of solving technical problems. I think it might be useful to explicitly identify to myself if I’m trying to finish a project or to challenge myself. If I’m facing a challenge question, then working it through on my own is noble. If I’m trying to finish a project, then I’m just wasting time. I’d like to not have moral or shame associations in either case.

Reading and Notes

Reading and note-taking are definitely where the Knowledge Bootstrapping process really shines. Being able to efficiently pull information out of text can be difficult, and Elizabeth uses even more structure for this than I think she realizes (or at least more than Knowledge Bootstrapping makes explicit).

Her strategy for note-taking is:

- make a new page for each source using a specific template

- fill in a bunch of meta-data about the source

- brain-dump everything you already think about the source

  - the explicit purpose for this is that it gets the thoughts out of your head, letting you actually focus on the source information

  - I suspect that a large part of the benefit is that you explicitly predict what the source will say, making it easier to notice when it says something different. That surprise is likely the key to new information

- fill in the source’s outline (I never did this step)

- fill in notes for each section
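To make the shape of that template concrete, here is a small Python sketch that generates a Markdown skeleton for a source page. The function name, the headings, and the metadata fields are my own guesses at a reasonable layout, not Elizabeth's actual Roam template.

```python
from typing import List


def source_note_template(title: str, author: str, year: int, sections: List[str]) -> str:
    """Build a Markdown skeleton for notes on one source (hypothetical layout)."""
    lines = [
        f"# Source: {title}",
        "",
        "## Metadata",
        f"- Author: {author}",
        f"- Year: {year}",
        "",
        "## Pre-read brain dump",
        "(what I already believe, and what I expect this source to say)",
        "",
        "## Outline",
    ]
    # The outline is just the section titles, filled in before reading closely.
    lines += [f"- {name}" for name in sections]
    lines += ["", "## Notes"]
    # One notes heading per section, each starting with an empty claim bullet.
    for name in sections:
        lines += [f"### {name}", "- claim (p. ?): ", ""]
    return "\n".join(lines)


# Placeholder source details, invented purely for the example.
page = source_note_template("Example Paper", "Doe", 2020, ["Introduction", "Results"])
print(page)
```

Generating the page up front would at least remove the friction of retyping the structure for every source, which matters if you take notes in plain Markdown rather than Roam.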

Elizabeth’s recommendation is that if you’re not sure what to write down in the notes, you should go back to your questions and break them down further. I can confirm that after I had all my questions broken out, it was very easy to figure out what was relevant. This also made it easier to skim the source and to skip sections. I knew at a quick glance whether a chapter or section was related to my main question and had no compunctions about skipping around.

This is a pretty big difference from how I normally read things. I tend to be a completionist when I read, and I definitely feel an aversion to skimming or skipping content normally. In the past, I’d feel the need to read an entire source document in order to say whether it was “good” or if I “liked it”. I had a sense that if I didn’t read every word, I couldn’t tell people that I’d read it. And if I couldn’t tell people that I’d read it I wouldn’t get status points for it or something. Maybe there’s something here about reading not for knowledge but for status and identity.

The knowledge that I was reading for a specific purpose was very freeing, and I felt much more flexible with what I could read or not read.

In any case, I felt comfortable reading the sources just to pick out information, and I felt comfortable with my ability to pick out whatever information was important. What I was less comfortable with was recording that information in a useful way.

This is the main place that I would have liked more information from Knowledge Bootstrapping. When I looked at Elizabeth’s Roam examples, I was blown away by the structure of her notes. It’s not just well organized at a section level; each individual paragraph is well-tagged with claim/synthesis/implication annotations. She also carefully records page numbers from the book for everything. There are also a lot of searchable tags that link different books together.

I don’t use Roam, so the immediate un-intuitiveness is likely one of the reasons that I find this so impressive. The amount of effort that she puts into her notes is kind of staggering, and I find them much closer to literal art than my own stream-of-consciousness rambling.

The thing is, my own stream-of-consciousness, concise notes are driven by a desire for efficiency. I don’t particularly want to stop and write down page numbers every couple of pages of a book. I’m certain that she gets a lot of benefit from it in terms of being able to review things later; I’m just not sure it would be worth it for me.

This is where my inexperience with my own tools, Zettlr and Markdown, really hampered me. I’m pretty sure I could get Zettlr to do most of what Roam was doing, and maybe even do it efficiently and speedily. To get there, I would have had to stop doing one research project and start another research project on just using Zettlr.

I would love to watch Elizabeth take notes on a chapter in real time, to see more of what her actual workflow is like. How much effort does she really expend in those notes, and does it seem worth it to me? Would it seem worth it to me for a more ambitious project? I think watching that would also help me learn the method a lot better than reading about how she does notes, as it would be directly tied to a research project already.

Synthesis

Synthesizing notes into answers to questions was conceptually easy, but logistically I was limited by the same inexperience with my tools as I was when I was taking the notes themselves. Before I do another research project, I want to learn more about using Zettlr (or another tool if I choose to switch) to make citations and cross-post connections.

During this research project, I would often take notes on a source into two different docs at the same time. I’d be putting all my notes directly into the notes doc for that source, then I would switch to my questions doc and start adding some data there immediately.

I noticed while doing my research project that I at times wanted to construct an argument between a couple of sources. “X says x, Y says y, how do I use both these ideas to answer my question?” I found actually doing this to be annoying, and I ended up not really doing much of it in my notes or in my synthesis.

That type of conversation between sources is one of the great strengths of the erstwhile Slate Star Codex (as well as many other blogs I love), so I want to encourage it in my own writing. I don’t normally do that by default, so having it seem desirable here seems like a strength of the method. Before I do something like this again, I’d want to remove whatever barriers made me averse to doing that kind of synthesis.

This is the first time that I’ve appreciated the qualitative differences between different citation styles. Prior to this, when I would write a paper or report, I’d just throw a link or title into a references section while I was writing and clean it up later. I’d pick whatever citation style was called for by the journal/class that I’d be submitting the paper to. I treated citations (and citation style) like something that was getting in the way of writing a paper and figuring things out.

Taking notes (and later synthesizing them) from a question-centered perspective showed me why citations are useful beyond just crediting others. If I were comfortable with an easy-to-use citation style (AuthorYear?), I could refer to the sources that way in my notes and in my synthesis docs and more easily create the type of “X says, Y says” conversations between sources that I think are so useful.

That seems to be the root of my aversion to doing this type of source vs source conversation. I knew I was going to publish a blog post with my synthesis, and the idea of going back and fixing all the citations into a coherent style made me not want to do it in the first place.

Elizabeth recommends writing out the answers to your sub-questions in the same doc as the questions themselves. Step 9 of her extended process description is just “Change title of page to Synthesis: My Conclusion” because your questions now all have answers. I found this advice to be very helpful. I would sometimes get tired of just reading and note-taking, and feel like I should be done. Then I’d go write up the answers to my questions, and in doing so I’d come to a point that I couldn’t really explain yet. That would re-energize me, as suddenly there was an interesting question to address. The act of organizing all the things I’d read about helped me focus on why I was interested in the question in the first place as well as what I still didn’t understand. This aspect of the process, creating questions and then long-form answering them in my own words, seems to cause me to automatically do the Feynman Technique.

KB and me

I liked this experiment. I learned what I wanted to learn on an object level, and doing it felt more free and curiosity driven than a lot of my reading and learning. I think regardless of what I do for future learning projects, I’ll definitely do the question decomposition part of KB again. I’m not quite sure about using the note-taking structure of the method; I’ll need to experiment with it a bit more.

I do think that I’d want to know better how to use my tools before I do the next project like this. For this first project, I had the excitement of doing a new thing a new way to keep me doing the method. I think once the excitement of a new method wears off, the friction of note-taking could stop me from doing it if I didn’t get good at Markdown and Zettlr/Roam first.

Honestly, I think one of the greatest benefits to this project was the introspection of trying to figure out how well I was learning. If I hadn’t been paying attention to my own thoughts and motivations, this project could have produced similar understanding of my original question without really giving me any information about how I learn or what emotional blocks were contributing to poor learning methods. That’s not really a part of Knowledge Bootstrapping on its own, but maybe having a backburner process running in my mind asking how my learning is going would help me more than it would slow me down.