I eat a lot of eggs. Maybe three times a week, I’ll make myself an egg burrito for breakfast. I also tend to eat a lot of omelets for dinner. That’s six eggs a week for breakfasts, plus maybe four more for dinners. I eat a lot of eggs, which means I go through a lot of egg cartons.

In the mornings, when I’m standing bleary-eyed in front of the stove waiting for my eggs to cook, I often amuse myself by placing the remaining eggs into the carton in some symmetric fashion. I do this so often that I’ve started eating even numbers of eggs at a time, just to make sure I can put away the rest of the eggs symmetrically.

When I started doing this, I generally just tried to arrange them with symmetry about the x-axis. In this case, the x-axis divides a dozen-egg carton in half hot-dog style (even though I eat lots of eggs, I don’t really buy the larger cartons). Arranging eggs in this manner is easy, because all you do is organize the bottom row in the same way as the top row.

Organizing eggs symmetrically got me thinking about how many ways I could actually pack them. For x-axis symmetry, I really only care about the top row. After I set the top row, the bottom row is uniquely defined. So if I have some even number of eggs, the number of ways I can put half of them into the top row is the same as the number of ways I can organize the eggs symmetrically.

It turns out that the number of ways I can put (for example) 2 eggs into 6 spots is given by the choose function. The choose function, (n choose k), is defined as the number of ways that you can pick k things from n possibilities. That’s exactly what I’m doing when I put eggs into a carton: I’m choosing 2 out of 6 possible places to put eggs.
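As a quick sanity check on the choose function, here is a small sketch that lists every way to pick 2 of the 6 top-row spots and compares the count with Python’s built-in `math.comb` (available in Python 3.8 and later). The names `spots` and `placements` are just mine for illustration.

```python
from itertools import combinations
from math import comb

spots = range(6)  # the six positions in the top row, numbered 0 to 5

# Every distinct way to choose 2 of the 6 spots for eggs
placements = list(combinations(spots, 2))

print(len(placements))  # 15
print(comb(6, 2))       # 15
```

Both numbers agree, which is all (6 choose 2) is claiming.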

This means that if I have 2n eggs left, for 0<=n<=6, there are (6 choose n) ways of organizing the eggs symmetrically. If I try a new possibility each morning, starting on the day that I got them (not on the day I first ate them), then I’ll eventually have tried (6 choose 6) + (6 choose 5) + … = sum from i = 0 to 6 of (6 choose i) packings.

Plugging this into Python, I get:

    >>> from math import factorial
    >>> total = 0
    >>> for i in range(7):
    ...     total += factorial(6) // (factorial(i) * factorial(6 - i))
    ...
    >>> total
    64

That’s 64 possible packings (counting the full carton and the empty one), just for symmetry about the x-axis.
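The same number falls out of a brute-force check, which I find more convincing than a formula. The sketch below walks through all 2^12 ways to fill a dozen-egg carton and keeps the ones where the bottom row matches the top row. The tuple-of-booleans representation and the names `is_x_symmetric` and `by_eggs` are my own choices, not anything standard.

```python
from itertools import product

ROWS, COLS = 2, 6  # a dozen-egg carton, long side along the x-axis

def is_x_symmetric(carton):
    # carton is a tuple of 12 booleans in row-major order: top row first
    top, bottom = carton[:COLS], carton[COLS:]
    return top == bottom

# Try every possible way to fill the carton and keep the symmetric ones
symmetric = [c for c in product((False, True), repeat=ROWS * COLS)
             if is_x_symmetric(c)]
print(len(symmetric))  # 64

# Tally the symmetric packings by how many eggs are left in the carton
by_eggs = {}
for carton in symmetric:
    eggs = sum(carton)
    by_eggs[eggs] = by_eggs.get(eggs, 0) + 1
print(by_eggs)
```

The tally by egg count is just the row 1, 6, 15, 20, 15, 6, 1 of (6 choose n) values, and the total is 2^6 because each of the six top-row spots is independently full or empty.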

After a few months of eating eggs, I’d pretty much covered all of those. To make it a bit more interesting, I decided to go for horizontal symmetry instead. You still end up dividing the eggs and placing half of them in the left side of the carton. The right side is just a mirror of the left. At first, this seemed like it would be more interesting because instead of one row of six, I actually have two rows of three.

Unfortunately, the math works out exactly the same. There are still only six positions to place the eggs. This time the six are on one side, rather than on the top. While the positions might look slightly more interesting, there are the same number of them.

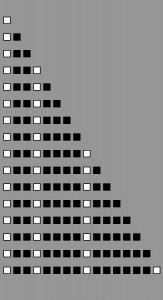

The last place to look for interesting symmetries in my dozen-egg carton is rotational. In this case, the carton would look the same if I rotated it 180 degrees. This only happens if the top right quadrant is the bottom left quadrant turned 180 degrees (a reflection through the center of the carton, not a mirror across either axis). Same goes for top left and bottom right. So I’ve got my even number of eggs, and I can then divide them and put them however I want in the left quadrants, which defines the positions in the two right quadrants.

The count is exactly the same as with both other forms of symmetry: six free positions, so 64 packings. The only change is how the matching egg positions are defined.
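To convince myself that all three symmetries really give the same count, here is a rough brute-force sketch. It describes each symmetry as a rule sending a cell (row, column) to the cell it has to match, then counts the packings that obey the rule. The grid coordinates and the names `SYMMETRIES` and `count_symmetric` are my own assumptions for this example.

```python
from itertools import product

ROWS, COLS = 2, 6
CELLS = [(r, c) for r in range(ROWS) for c in range(COLS)]

# Each symmetry maps a cell to the partner cell that must hold the same thing
SYMMETRIES = {
    "x-axis": lambda r, c: (ROWS - 1 - r, c),               # top <-> bottom
    "y-axis": lambda r, c: (r, COLS - 1 - c),               # left <-> right
    "rotation": lambda r, c: (ROWS - 1 - r, COLS - 1 - c),  # 180 degree turn
}

def count_symmetric(partner):
    count = 0
    for bits in product((0, 1), repeat=len(CELLS)):
        carton = dict(zip(CELLS, bits))
        # A packing is symmetric if every cell matches its partner cell
        if all(carton[cell] == carton[partner(*cell)] for cell in CELLS):
            count += 1
    return count

counts = {name: count_symmetric(rule) for name, rule in SYMMETRIES.items()}
print(counts)  # {'x-axis': 64, 'y-axis': 64, 'rotation': 64}
```

In every case the rule pairs the twelve cells up into six pairs, which is why the answer never changes.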

The last thing that I’m interested in here is the combination of all three forms of symmetry. A carton packing that is symmetric about the x-axis and y-axis is also symmetric about the origin, but not all packings that are symmetric about the origin are also symmetric about both axes.
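A small sketch makes that one-way relationship concrete. It stores a packing as the set of occupied cells, checks every packing that is symmetric about both axes for rotational symmetry, and then looks for packings that are rotationally symmetric only. The helper names `flip_x`, `flip_y`, and `rotate` are mine.

```python
from itertools import product

ROWS, COLS = 2, 6
CELLS = [(r, c) for r in range(ROWS) for c in range(COLS)]

def flip_x(eggs):
    # Mirror about the x-axis: top row <-> bottom row
    return frozenset((ROWS - 1 - r, c) for r, c in eggs)

def flip_y(eggs):
    # Mirror about the y-axis: left half <-> right half
    return frozenset((r, COLS - 1 - c) for r, c in eggs)

def rotate(eggs):
    # A 180 degree turn is the same as doing both flips
    return flip_x(flip_y(eggs))

# Every possible packing, as the set of cells that hold an egg
packings = [frozenset(cell for cell, bit in zip(CELLS, bits) if bit)
            for bits in product((0, 1), repeat=len(CELLS))]

both_axes = [p for p in packings if flip_x(p) == p and flip_y(p) == p]
rotational = [p for p in packings if rotate(p) == p]

# Symmetric about both axes always implies symmetric about the origin...
print(all(rotate(p) == p for p in both_axes))  # True

# ...but not the other way around: two eggs in opposite corners
corners = frozenset({(0, 0), (ROWS - 1, COLS - 1)})
print(rotate(corners) == corners, flip_x(corners) == corners)  # True False

rotation_only = [p for p in rotational if flip_x(p) != p]
print(len(rotation_only))  # 56
```

The opposite-corners packing is the simplest counterexample I could think of: it survives a 180 degree turn but not a flip about either axis.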

To create symmetry about both axes, we only have choices in a single quadrant of three positions. Once one quadrant is packed, all three other quadrants are fully determined. This means that the total number of eggs has to be divisible by 4, not just 2. We can only do this with 12, 8, 4, and 0 eggs.

With 12 eggs and 0 eggs, there isn’t even a choice. Both those options give us a single method of creating symmetries. The 8 egg option means that there will be two eggs in each quadrant, for a total of (3 choose 2) symmetry options. The 4 egg option means that there’s one egg in each quadrant, giving us (3 choose 1) symmetry options.

(3 choose 2) = 3

and

(3 choose 1) = 3

This is because if you’re putting one object into three places, there are three possible ways to do it. If you’re putting two objects into three places, that’s the same as choosing which one of the three places to leave empty. Thus the two are the same.

The total number of carton packings that are symmetric about both axes and the origin is then 1 + 1 + 3 + 3, or 8.
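Eight is few enough to list outright. As a rough sketch, the code below picks every subset of the three top-left quadrant positions, mirrors it into the other three quadrants, and tallies the results by egg count. The coordinates and the helper name `unfold` are my own.

```python
from itertools import combinations

QUADRANT = [(0, 0), (0, 1), (0, 2)]  # top-left quadrant of a 2x6 carton

def unfold(cells):
    # Copy each chosen cell into all four quadrants by mirroring
    eggs = set()
    for r, c in cells:
        eggs |= {(r, c), (1 - r, c), (r, 5 - c), (1 - r, 5 - c)}
    return frozenset(eggs)

by_eggs = {}
for k in range(len(QUADRANT) + 1):
    for chosen in combinations(QUADRANT, k):
        packing = unfold(chosen)
        by_eggs[len(packing)] = by_eggs.get(len(packing), 0) + 1

print(by_eggs)                # {0: 1, 4: 3, 8: 3, 12: 1}
print(sum(by_eggs.values()))  # 8
```

The tally matches the 1 + 3 + 3 + 1 breakdown for 0, 4, 8, and 12 eggs.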

Now that I’ve actually worked out how many symmetric packings there are, I may get around to writing a program to generate them all. Sounds like I’m going to get more practice with Processing.