After a lot of flailing, I’ve finally found a method of learning from ChatGPT (or other LLMs) that’s helpful for complex subjects.

In the past few years, I’ve gotten a lot of use from LLMs for small bounded problems. They’ve been great when I have a compiler error, need to know some factoid about sharks, or just want to check an email for social niceties before I send it.

Where I’ve struggled is in using them to learn something complicated. Not just a piece of syntax or a fact, but a whole discipline. For a while, my test of a new LLM was to try to learn about beta-voltaic batteries from it. This was interesting, and I did learn a bit. The problem was that I felt adrift: after a few responses, I didn’t know how to dig further into what really mattered in the physics of the topic. It worked as a test of the LLM, but not for learning the topic.

Today I tried having ChatGPT teach me about SLAM, and it worked surprisingly well. The main difference was that I went in with an outline of what I wanted to learn. Specifically, I used this list of 100 interview questions. This gave me a sense of progress – I knew I was learning something relevant to my topic. It also gave me a sense of what “done” looked like – it was very obvious that I still had 46 questions to go, so I hadn’t explored everything yet.

My overall method was:

- Go question by question

- Try to answer on my own

- Pose the question to ChatGPT

- Explore ChatGPT’s answer until I understood it fully

- Add new info to Anki so I’d remember it later

What makes this work? The external sense of progress and done-ness certainly helped. Another aspect of this is that it was like an externally guided Feynman technique. I was constantly asking myself questions and trying to answer them. Then I compared my answer to an immediate nigh-expert-level answer.

This is better than books or papers (at least where the topic is already well covered by what the LLM knows), because I get an immediate answer without having to search through tens of pages. It’s probably not better than talking to a real practicing expert, because a human expert might be better at prompting me with other questions or pointing out thorny issues that the LLM misses.

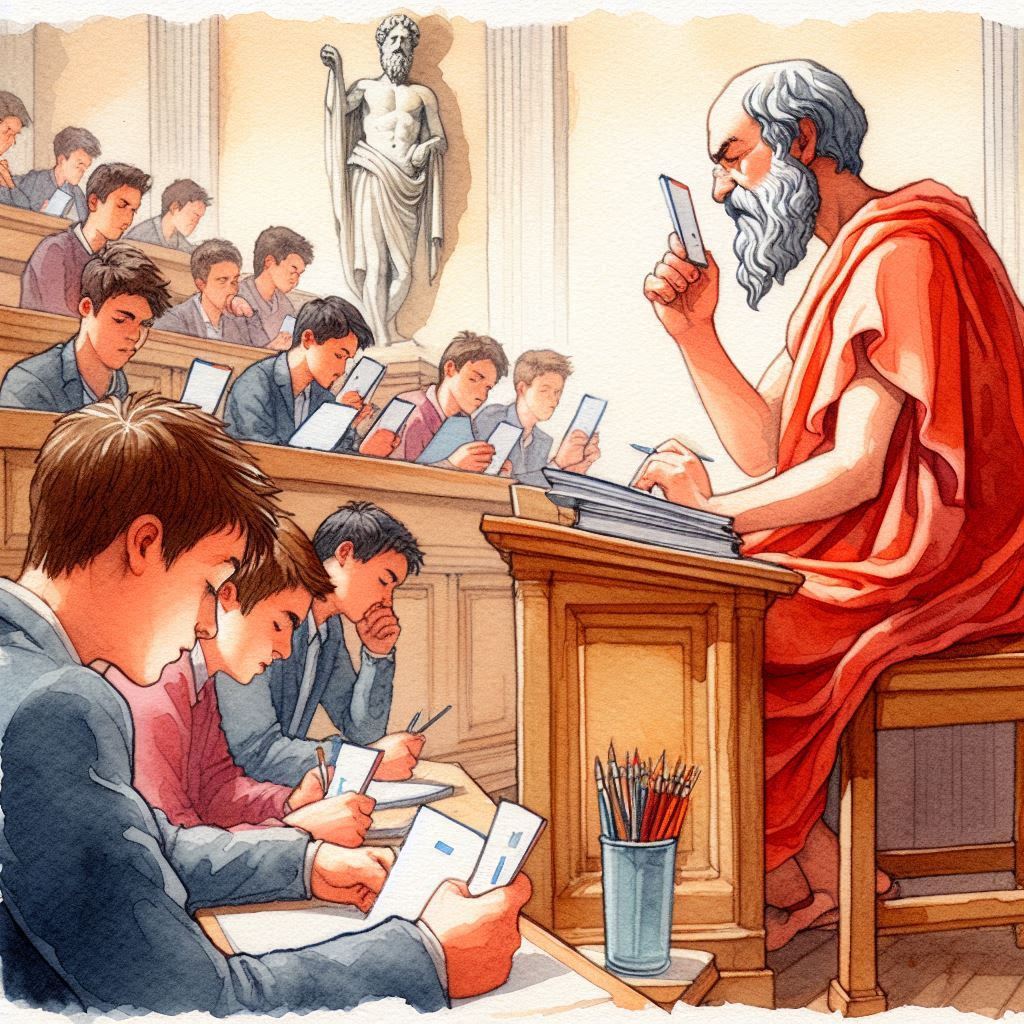

In fact, this whole process has made me realize how similar the Feynman technique is to a Socratic dialog. Probably the Socratic dialog is ideal, and the Feynman technique is what you fall back on when you don’t have access to a Socrates. My list of interview questions acted like a Socrates, priming the question pump for a ChatGPT-fueled Feynman technique.

The obvious next question is: how do I do this for a less structured topic? What if I don’t have a list of 100 questions to answer for a well-studied and well-documented topic?

I have two ideas for this:

- Come up with a project (a program, an essay) that demonstrates that I know something. Then ask myself and the LLM questions until I’m able to complete the project.

- Get more comfortable having a conversational back-and-forth with an LLM: asking it questions and prompting it to be more Socratic with me.

This reminds me a lot of my prior learning experiments. They had definite goals: write a blog post explaining some topic. Doing this required me to actually go deep and understand things, which my beta-voltaic explanation test for LLMs really didn’t. These ideas also force more question decomposition, which was one of the major benefits of my prior experiments too.

In fact, maybe the main takeaway here is that learning from an LLM can be done just by slotting the LLM into the “reading” step of the Knowledge Bootstrapping method.