Way back around 500 BC, the Athenians took part in a rebellion against the Persian King Darius. When Darius learned of it, he was furious. He was apparently so worried that he would forget to punish the Athenians that he had a servant remind him. Every evening at dinner, the servant was to interrupt him three times to say “remember the Athenians.”

There are a few people in my life that I’ve majorly changed my mind about. For most of them, I started off liking them quite a bit. Then I learned of something terrible that they’d done, or they said something very mean to me, and I stopped wanting to be friends with them.

Sometimes mutual friends have tried to intervene on their behalf. “Don’t hold a grudge,” they tell me.

I have to imagine that when people advise you not to hold a grudge, they’re imagining something like King Darius. If only Darius could stop reminding himself about the Athenian betrayal, he could forgive them and everything could go back to the way it was.

I don’t have calendar reminders to keep me from forgetting what people have done. I haven’t gone into my phone to delete anyone’s phone number.

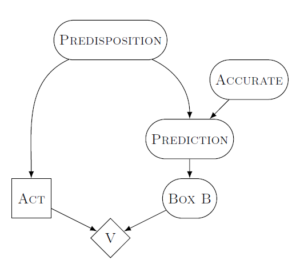

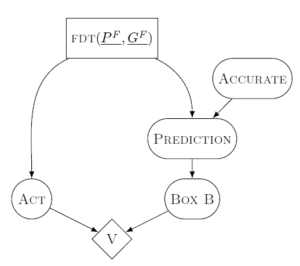

For me, the situation is very different. I may be consciously angry about some transgression for a while, but that emotion dissipates over the course of a few days to a few weeks. What really sticks with me is not the feeling of anger. It’s the change in my model of what that person is likely to do.

When I think of spending time with someone, I have some sense of what that time would be like. If that sense seems good, then I’m excited to hang out with them. If it seems bad, then I’m not. That sense is based on a model of who that person is, and what hanging out with them will be like. It’s not an explicit rehearsal of past times, good or bad.

Models

I try to think about each of the people I know as their own person. The sign to me that I know someone well is that I can predict what they’ll care about, give them gifts that they find fun or useful, tell jokes or stories tailor-made for them, and imagine the advice they’d give me in a given situation.

The model that I have of someone impacts how much I choose to interact with them, and also in what ways I choose to interact with them.

I try to keep my model of a person up to date, since I know people change. Usually they change slowly, and I’m changing with them. Sometimes we grow closer as friends as we change.

Sometimes, I get new evidence about a person that dramatically changes my model of them. This is what it’s like for me if someone surprisingly treats me poorly. I get angry, then the anger fades and all that’s left is a changed model.

But there’s another thing that can change my models of people.

The way I think about people’s words and actions is filtered through how I think the world works. If my model for how the world works changes, then I might suddenly change how I view certain people. They haven’t done anything different than usual, but it now means a very different thing to me.

Forgiveness

When people tell me not to hold a grudge, I think that they want me to treat a person the way I treated them when I had an older model. This is impossible. I can’t erase the evidence that I now have about who they are as a person.

But the thing I need to keep in mind is that I can’t ever have all of the evidence necessary to know who another person is and what they’ll do. If someone screams at me over something, it’s very possible that they rarely yell and it was just a bad day for them. How do I incorporate that into my model?

This is where forgiveness comes in.

If someone does something really bad to you, it may become the most salient feature of your model of them. The thing is, the other parts of your model of them are still valid.

Forgiveness is letting that vivid experience shrink to its proper size in your model. Depending on the event, that proper size may still be large. But by forgiving someone you give them the ability to change your model of them again. You’re letting them show you that they aren’t normally someone who would scream at you. You’re letting them show you that they have changed since then.

Forgiveness isn’t a thing that can be forced. The model that I have of a person isn’t a list that I keep in my head. My model of you isn’t some explicit verbal thing. It’s all the memories I have of you; it’s the felt sense that I get in my gut when I think of you. I can’t just decide that the felt sense is different now.

Forgiveness is a slow-growing thing. I can choose to help it along, to feed it with thoughts of compassion and with evidence that my model may be off-base. But regardless of what I try to do, forgiveness takes time.

Apologies

If forgiveness is letting someone’s actions influence your model of them again, then it’s pretty clear that forgiveness isn’t all that is necessary.

In addition to me being willing to update my model of another person, they need to be giving me evidence of who they are. They need to be giving me information to refine my model of them again.

Apologies are one way of doing this. If someone says they’re sorry for something, then that’s some evidence (often weak) that they actually are different from how an action made them seem. The best sort of apology, then, is some kind of action that really brings home the fact that the person is different. It’s saying sorry, then acting in a way that prevents the transgression from happening again.

This also means that, in order for me to properly apologize to someone else, they need to actually be willing to hear me. Which is kind of a catch-22 in some ways.

I’ve definitely hurt some people with my words and actions in the past. There are a few people that just never want to talk with me again. That’s their right, but it means that I can’t properly apologize. They’ll never see the ways in which I have changed, and their model of me will remain stuck on a person that I’m not anymore.